Welcome to hvisor!

Hello~

Welcome to hvisor!

hvisor is a lightweight Type-1 virtual machine monitor written in Rust, offering efficient resource management and low-overhead virtualization performance.

Features

- Cross-platform support: Supports multiple architectures including AARCH64, RISC-V, and LoongArch.

- Lightweight: Focuses on core virtualization features, avoiding unnecessary complexity found in traditional virtualization solutions, suitable for resource-constrained environments.

- Efficient: Runs directly on hardware without going through an OS layer, providing near-native performance.

- Security: Rust is known for its memory safety and concurrent programming model, helping to reduce common system-level programming errors such as memory leaks and data races.

- Fast startup: Designed to be simple with a short startup time, suitable for scenarios that require rapid deployment of virtualization.

Main Functions

- Virtual Machine Management: Provides basic management functions for creating, starting, stopping, and deleting virtual machines.

- Resource Allocation and Isolation: Supports efficient allocation and management of CPU, memory, and I/O devices, using virtualization technology to ensure isolation between different virtual machines, enhancing system security and stability.

Use Cases

- Edge Computing: Suitable for running on edge devices, providing virtualization support for IoT and edge computing scenarios.

- Development and Testing: Developers can quickly create and destroy virtual machine environments for software development and testing.

- Security Research: Provides an isolated environment for security research and malware analysis.

hvisor supported hardware platforms

2025.3.18

aarch64

- QEMU virt aarch64

- NXP i.MX8MP

- Xilinx Ultrascale+ MPSoC ZCU102

- Rockchip RK3588

- Rockchip RK3568

- Forlinx OK6254-C

riscv64

- QEMU virt riscv64

- FPGA Xiangshan (Kunming Lake) on S2C Prodigy S7-19PS-2

- FPGA RocketChip on Xilinx Ultrascale+ MPSoC ZCU102

loongarch64

- Loongson 3A5000+7A2000

- Loongson 3A6000

hvisor Hardware Adaptation Development Manual 🧑🏻💻

wheatfox (wheatfox17@icloud.com) 2025.3.17

Design Principles

- Code and board configuration separation: No

platform_xxxrelatedcfgshould appear insidesrcof hvisor itself. - Platform independence: Introduce the previous hvisor-deploy architecture, orderly store information about various architectures and boards in the

platformdirectory. - Board directory index:

- Uniformly use

platform/$ARCH/$BOARDas the dedicated directory for the board. - Each board's unique BID (Board ID) adopts the

ARCH/BOARDformat, such asaarch64/qemu-gicv3.

- Uniformly use

- Simplified compilation: Support using

BID=xxx/xxxto directly specify the board, while also compatible withARCH=xxx BOARD=xxxstyle. - Structured configuration: Each board directory contains the following files:

linker.ld- Link scriptplatform.mk- QEMU startup Makefile andhvisor.binhandlingboard.rs- Board definition Rust codeconfigs/- JSON configurations for hvisor-tool startup zonescargo/features- Specific cargo features corresponding to the board, including drivers, functions, etc.config.template.toml- Template for.cargo/config, maintained by each board

test/- (Optional) QEMU related test code, including unit tests, system tests, etc.image/- Startup file directory, containing multiple subdirectories:bootloader/- (Optional) Used for local QEMU operation and unittest/systemtest testingdts/- (Optional) Device tree source files for zones 0, 1, 2, …its/- (Optional) Used for generating U-Boot FIT image (hvisor aarch64 zcu102)acpi/- (Optional) ACPI device tree source code for x86 platform (hvisor x86_64)kernel/- (Optional) Kernel Image suitable for the target platformvirtdisk/- (Optional) Virtual disk files, such as rootfs, etc.

Code Implementation Details

Auto-generate .cargo/config.toml

- Generated by

tools/gen_cargo_config.sh, ensuring dynamic updates to thelinker.ldconfiguration. config.template.tomluses placeholders like__ARCH__,__BOARD__, replaced bygen_cargo_config.shto generate.cargo/config.toml.

build.rs Automatically Symlink board.rs

build.rsis responsible for symlinkingplatform/$ARCH/$BOARD/board.rstosrc/platform/__board.rs.- Avoids Makefile handling, triggered only when

envvariables change, reducing unnecessary full recompilations.

Select drivers through Cargo features

- Avoid

platform_xxxdirectly appearing insrc/, switch to configuration based onfeatures. cargo/featuresuniformly stores configurations of board drivers, functions, etc.

Overview of features Corresponding to Each Board

| BOARD ID | FEATURES |

|---|---|

aarch64/qemu-gicv3 | gicv3 pl011 iommu pci pt_layout_qemu |

aarch64/qemu-gicv2 | gicv2 pl011 iommu pci pt_layout_qemu |

aarch64/imx8mp | gicv3 imx_uart |

aarch64/zcu102 | gicv2 xuartps |

riscv64/qemu-plic | plic |

riscv64/qemu-aia | aia |

loongarch64/ls3a5000 | loongson_chip_7a2000 loongson_uart loongson_cpu_3a5000 |

loongarch64/ls3a6000 | loongson_chip_7a2000 loongson_uart loongson_cpu_3a6000 |

aarch64/rk3588 | gicv3 uart_16550 uart_addr_rk3588 pt_layout_rk |

aarch64/rk3568 | gicv3 uart_16550 uart_addr_rk3568 pt_layout_rk |

x86_64/qemu |

Development and Compilation Guide

Compile Different Boards

make ARCH=aarch64 BOARD=qemu-gicv3

make BID=aarch64/qemu-gicv3 # Use BID shorthand

make BID=aarch64/imx8mp

make BID=loongarch64/ls3a5000

make BID=x86_64/qemu

Adapt New Boards

- Determine

features: Refer to existingfeaturesfor classification, add required drivers and configurations. - Create

platform/$ARCH/$BOARDdirectory:- Add

linker.ld,board.rs,features, etc.

- Add

- Compile Test:

make BID=xxx/new_board

features Design Principles

- Minimize hierarchy:

- For example,

cpu-a72instead ofboard_xxx, to facilitate reuse across multiple boards.

- For example,

- Clear driver/function classification:

irqchip(gicv3,plic, ...)uart(pl011,imx_uart, ...)iommu,pci,pt_layout_xxx, ...

Running hvisor on QEMU

1. Install Cross Compiler aarch64-none-linux-gnu-10.3

URL: https://developer.arm.com/downloads/-/gnu-a

Tool selection: AArch64 GNU/Linux target (aarch64-none-linux-gnu)

wget https://armkeil.blob.core.windows.net/developer/Files/downloads/gnu-a/10.3-2021.07/binrel/gcc-arm-10.3-2021.07-x86_64-aarch64-none-linux-gnu.tar.xz

tar xvf gcc-arm-10.3-2021.07-x86_64-aarch64-none-linux-gnu.tar.xz

ls gcc-arm-10.3-2021.07-x86_64-aarch64-none-linux-gnu/bin/

Installation complete, remember the path, for example: /home/tools/gcc-arm-10.3-2021.07-x86_64-aarch64-none-linux-gnu/bin/aarch64-none-linux-gnu-, this path will be used later.

2. Compile and Install QEMU 9.0.1

Note, QEMU needs to be switched from 7.2.12 to 9.0.1 for proper use of PCI virtualization

# Install required dependencies for compilation

sudo apt install autoconf automake autotools-dev curl libmpc-dev libmpfr-dev libgmp-dev \

gawk build-essential bison flex texinfo gperf libtool patchutils bc \

zlib1g-dev libexpat-dev pkg-config libglib2.0-dev libpixman-1-dev libsdl2-dev \

git tmux python3 python3-pip ninja-build

# Download source code

wget https://download.qemu.org/qemu-9.0.1.tar.xz

# Extract

tar xvJf qemu-9.0.1.tar.xz

cd qemu-9.0.1

# Generate configuration file

./configure --enable-kvm --enable-slirp --enable-debug --target-list=aarch64-softmmu,x86_64-softmmu

# Compile

make -j$(nproc)

Then edit the ~/.bashrc file, add a few lines at the end of the file:

# Please note, the parent directory of qemu-9.0.1 can be flexibly adjusted according to your actual installation location. Also, it needs to be placed at the beginning of the $PATH variable.

export PATH=/path/to/qemu-7.2.12/build:$PATH

Afterward, you can update the system path in the current terminal by source ~/.bashrc or simply restart a new terminal. At this point, you can confirm the qemu version, if it displays qemu-9.0.1, it means the installation was successful:

qemu-system-aarch64 --version # Check version

Note, the above dependency packages may not be complete, for example:

- If

ERROR: pkg-config binary 'pkg-config' not foundappears, you can install thepkg-configpackage;- If

ERROR: glib-2.48 gthread-2.0 is required to compile QEMUappears, you can install thelibglib2.0-devpackage;- If

ERROR: pixman >= 0.21.8 not presentappears, you can install thelibpixman-1-devpackage.

If you encounter an error ERROR: Dependency "slirp" not found, tried pkgconfig during the generation of the configuration file:

Download the https://gitlab.freedesktop.org/slirp/libslirp package and install it according to the readme.

3. Compile Linux Kernel 5.4

Before compiling the root linux image, change the CONFIG_IPV6 and CONFIG_BRIDGE config in the .config file to y, to support creating bridges and tap devices in root linux. The specific operations are as follows:

git clone https://github.com/torvalds/linux -b v5.4 --depth=1

cd linux

git checkout v5.4

# Modify the CROSS_COMPILE path according to the path of the cross compiler installed in the first step

make ARCH=arm64 CROSS_COMPILE=/root/gcc-arm-10.3-2021.07-x86_64-aarch64-none-linux-gnu/bin/aarch64-none-linux-gnu- defconfig

# Add a line in .config

CONFIG_BLK_DEV_RAM=y

# Modify two CONFIG parameters in .config

CONFIG_IPV6=y

CONFIG_BRIDGE=y

# Compile, modify the CROSS_COMPILE path according to the path of the cross compiler installed in the first step

make ARCH=arm64 CROSS_COMPILE=/root/gcc-arm-10.3-2021.07-x86_64-aarch64-none-linux-gnu/bin/aarch64-none-linux-gnu- Image -j$(nproc)

If you encounter an error during the compilation of linux:

/usr/bin/ld: scripts/dtc/dtc-parser.tab.o:(.bss+0x20): multiple definition of `yylloc'; scripts/dtc/dtc-lexer.lex.o:(.bss+0x0): first defined hereThen modify

scripts/dtc/dtc-lexer.lex.cunder the linux folder, addexternbeforeYYLTYPE yylloc;. Recompile, if you encounter an error: openssl/bio.h: No such file or directory, then executesudo apt install libssl-dev

After compilation, the kernel file is located at: arch/arm64/boot/Image. Remember the entire linux folder's path, for example: home/korwylee/lgw/hypervisor/linux, we will use this path again in step 7.

4. Build File System Based on Ubuntu 22.04 Arm64 Base

You can skip this part and directly download the ready-made disk image for use. https://blog.syswonder.org/#/2024/20240415_Virtio_devices_tutorial

We use ubuntu 22.04 to build the root file system.

Ubuntu 20.04 can also be used, but it will report an error of low glibc version when running, you can refer to the solution in the comment section of ARM64-qemu-jailhouse.

wget http://cdimage.ubuntu.com/ubuntu-base/releases/22.04/release/ubuntu-base-22.04.5-base-arm64.tar.gz

mkdir rootfs

# Create a 1G size ubuntu.img, you can modify the count to change the img size

dd if=/dev/zero of=rootfs1.img bs=1M count=1024 oflag=direct

mkfs.ext4 rootfs1.img

# Put ubuntu.tar.gz into the ubuntu.img that has been mounted to rootfs

sudo mount -t ext4 rootfs1.img rootfs/

sudo tar -xzf ubuntu-base-22.04.5-base-arm64.tar.gz -C rootfs/

# Let rootfs bind and get some information and hardware from the physical machine

# qemu-path is your qemu path

sudo cp qemu-path/build/qemu-system-aarch64 rootfs/usr/bin/

sudo cp /etc/resolv.conf rootfs/etc/resolv.conf

sudo mount -t proc /proc rootfs/proc

sudo mount -t sysfs /sys rootfs/sys

sudo mount -o bind /dev rootfs/dev

sudo mount -o bind /dev/pts rootfs/dev/pts

# Executing this command may report an error, please refer to the solution below

sudo chroot rootfs

apt-get update

apt-get install git sudo vim bash-completion \

kmod net-tools iputils-ping resolvconf ntpdate screen

# The following content surrounded by # can be done or not done

###################

adduser arm64

adduser arm64 sudo

echo "kernel-5_4" >/etc/hostname

echo "127.0.0.1 localhost" >/etc/hosts

echo "127.0.0.1 kernel-5_4">>/etc/hosts

dpkg-reconfigure resolvconf

dpkg-reconfigure tzdata

###################

exit

sudo umount rootfs/proc

sudo umount rootfs/sys

sudo umount rootfs/dev/pts

sudo umount rootfs/dev

sudo umount rootfs

Finally, unmount the mounts to complete the production of the root file system.

When executing

sudo chroot ., if an errorchroot: failed to run command ‘/bin/bash’: Exec format erroroccurs, you can execute the command:sudo apt-get install qemu-user-static sudo update-binfmts --enable qemu-aarch64

5. Rust Environment Configuration

Please refer to: Rust Language Bible

6. Compile and Run hvisor

First, pull the hvisor code repository to the local machine, then switch to the dev branch, and in the hvisor/images/aarch64 folder, put the previously compiled root file system and Linux kernel image respectively in the virtdisk and kernel directories, and rename them to rootfs1.ext4 and Image respectively.

Second, prepare the configuration files. Take the virtio-blk&console example as an example, this directory contains 6 files, perform the following operations on these 6 files:

- linux1.dts: Root Linux's device tree, hvisor will use it when starting.

- linux2.dts: Zone1 Linux's device tree, hvisor-tool will need it when starting zone1. Replace linux1.dts and linux2.dts in the devicetree directory with the same name files, and execute

make allto compile, obtaining linux1.dtb and linux2.dtb. - qemu_aarch64.rs, qemu-aarch64.mk directly replace the files with the same name in the hvisor repository.

Then, in the hvisor directory, execute:

make ARCH=aarch64 LOG=info BOARD=qemu-gicv3 run # or use BOARD=qemu-gicv2

Afterward, you will enter the uboot startup interface, under this interface execute:

bootm 0x40400000 - 0x40000000

This boot command will start hvisor from the physical address 0x40400000, 0x40000000 is essentially no longer used, but is still retained for historical reasons. When hvisor starts, it will automatically start root linux (used for management), and enter the shell interface of root linux, root linux is zone0, taking on management tasks.

When prompted for missing

dtc, you can execute the command:sudo apt install device-tree-compiler

7. Start zone1-linux Using hvisor-tool

First, complete the compilation of the latest version of hvisor-tool. For specific instructions, please refer to the README of hvisor-tool. For example, if you want to compile a command-line tool for arm64, and the source code of the Linux image in the Hvisor environment is located at ~/linux, you can execute

make all ARCH=arm64 LOG=LOG_WARN KDIR=~/linux

Please make sure that the Root Linux image in Hvisor is compiled from the Linux source directory specified in the options when compiling hvisor-tool.

After compilation, copy driver/hvisor.ko, tools/hvisor to the directory where zone1 linux starts in the image/virtdisk/rootfs1.ext4 root file system (for example, /same_path/); then put the kernel image of zone1 (if it is the same Linux as zone0, just copy a copy of image/aarch64/kernel/Image), and the device tree (image/aarch64/linux2.dtb) in the same directory (/same_path/), and rename them to Image and linux2.dtb.

Then you need to create a root file system for Zone1 linux. You can copy a copy of rootfs1.ext4 in image/aarch64/virtdisk, or repeat step 4 (preferably reduce the image size), and rename it to rootfs2.etx4. Then put rootfs2.ext4 in the same directory as rootfs1.ext4 (/same_path/).

If the capacity of rootfs1.ext4 is not enough, you can refer to img expansion to expand rootfs1.ext4.

Then on QEMU, you can start zone1-linux through root linux-zone0.

For detailed steps to start zone1-linux, refer to the README of hvisor-tool and the startup example

Install qemu

Install QEMU 9.0.2:

wget https://download.qemu.org/qemu-9.0.2.tar.xz

# Unzip

tar xvJf qemu-9.0.2.tar.xz

cd qemu-9.0.2

# Configure Riscv support

./configure --target-list=riscv64-softmmu,riscv64-linux-user

make -j$(nproc)

# Add to environment variable

export PATH=$PATH:/path/to/qemu-9.0.2/build

# Test if installation was successful

qemu-system-riscv64 --version

Install cross-compiler

The Riscv cross-compiler needs to be obtained and compiled from riscv-gnu-toolchain.

# Install necessary tools

sudo apt-get install autoconf automake autotools-dev curl python3 python3-pip libmpc-dev libmpfr-dev libgmp-dev gawk build-essential bison flex texinfo gperf libtool patchutils bc zlib1g-dev libexpat-dev ninja-build git cmake libglib2.0-dev libslirp-dev

git clone https://github.com/riscv/riscv-gnu-toolchain

cd riscv-gnu-toolchain

git rm qemu

git submodule update --init --recursive

# The above operation will occupy more than 5GB of disk space

# If git reports a network error, you can execute:

git config --global http.postbuffer 524288000

Then start compiling the toolchain:

cd riscv-gnu-toolchain

mkdir build

cd build

../configure --prefix=/opt/riscv64

sudo make linux -j $(nproc)

# After compilation, add the toolchain to the environment variable

echo 'export PATH=/opt/riscv64/bin:$PATH' >> ~/.bashrc

source ~/.bashrc

This will get the riscv64-unknown-linux-gnu toolchain.

Compile Linux

git clone https://github.com/torvalds/linux -b v6.2 --depth=1

cd linux

git checkout v6.2

make ARCH=riscv CROSS_COMPILE=riscv64-unknown-linux-gnu- defconfig

make ARCH=riscv CROSS_COMPILE=riscv64-unknown-linux-gnu- modules -j$(nproc)

# Start compiling

make ARCH=riscv CROSS_COMPILE=riscv64-unknown-linux-gnu- Image -j$(nproc)

Make Ubuntu root filesystem

wget http://cdimage.ubuntu.com/ubuntu-base/releases/20.04/release/ubuntu-base-20.04.2-base-riscv64.tar.gz

mkdir rootfs

dd if=/dev/zero of=riscv_rootfs.img bs=1M count=1024 oflag=direct

mkfs.ext4 riscv_rootfs.img

sudo mount -t ext4 riscv_rootfs.img rootfs/

sudo tar -xzf ubuntu-base-20.04.2-base-riscv64.tar.gz -C rootfs/

sudo cp /path-to-qemu/build/qemu-system-riscv64 rootfs/usr/bin/

sudo cp /etc/resolv.conf rootfs/etc/resolv.conf

sudo mount -t proc /proc rootfs/proc

sudo mount -t sysfs /sys rootfs/sys

sudo mount -o bind /dev rootfs/dev

sudo mount -o bind /dev/pts rootfs/dev/pts

sudo chroot rootfs

# After entering chroot, install necessary packages:

apt-get update

apt-get install git sudo vim bash-completion \

kmod net-tools iputils-ping resolvconf ntpdate

exit

sudo umount rootfs/proc

sudo umount rootfs/sys

sudo umount rootfs/dev/pts

sudo umount rootfs/dev

sudo umount rootfs

Run hvisor

Place the prepared root filesystem and Linux kernel image in the specified location in the hvisor directory, then execute make run ARCH=riscv64 in the hvisor root directory

By default, it uses PLIC, execute make run ARCH=riscv64 IRQ=aia to enable AIA specification

Start non-root linux

Use hvisor-tool to generate the hvisor.ko file, then you can start zone1-linux through root linux-zone0 on QEMU.

After starting root linux, execute in the /home directory

sudo insmod hvisor.ko

rm nohup.out

mkdir -p /dev/pts

mount -t devpts devpts /dev/pts

nohup ./hvisor zone start linux2-aia.json && cat nohup.out | grep "char device" && script /dev/null

Booting hvisor on NXP-IMX8MP

Date: 2024/2/25

Updated: 2025/3/7

Authors: Yang Junyi, Chen Xingyu, Li Guowei, Chen Linkun

1. Download the Linux source code provided by the manufacturer

https://pan.baidu.com/s/1XimrhPBQIG5edY4tPN9_pw?pwd=kdtk Extraction code: kdtk

Enter the Linux/sources/ directory, download the three compressed files OK8MP-linux-sdk.tar.bz2.0*, and after downloading, execute:

cd Linux/sources

# Merge split compressed files

cat OK8MP-linux-sdk.tar.bz2.0* > OK8MP-linux-sdk.tar.bz2

# Unzip the merged compressed file

tar -xvjf OK8MP-linux-sdk.tar.bz2

After unzipping, the OK8MP-linux-kernel directory is the Linux source code directory.

2. Compile Linux source code

Install cross-compilation tools

-

Download the cross-compilation toolchain:

wget https://armkeil.blob.core.windows.net/developer/Files/downloads/gnu-a/10.3-2021.07/binrel/gcc-arm-10.3-2021.07-x86_64-aarch64-none-linux-gnu.tar.xz -

Unzip the toolchain:

tar xvf gcc-arm-10.3-2021.07-x86_64-aarch64-none-linux-gnu.tar.xz -

Add the path so that

aarch64-none-linux-gnu-*can be used directly, modify the~/.bashrcfile:echo 'export PATH=$PWD/gcc-arm-10.3-2021.07-x86_64-aarch64-none-linux-gnu/bin:$PATH' >> ~/.bashrc source ~/.bashrc

Compile Linux

-

Switch to the Linux kernel source code directory:

cd Linux/sources/OK8MP-linux-sdk -

Execute the compilation command:

# Set Linux kernel configuration make OK8MP-C_defconfig ARCH=arm64 CROSS_COMPILE=aarch64-none-linux-gnu- # Compile the Linux kernel make ARCH=arm64 CROSS_COMPILE=aarch64-none-linux-gnu- Image -j$(nproc) # Copy the compiled image to the tftp directory cp arch/arm64/boot/Image ~/tftp/

Create a tftp directory here for later image organization and for using tftp to transfer images as mentioned in the appendix.

3. Prepare the SD card

-

Insert the SD card into the card reader and connect it to the host.

-

Switch to the Linux/Images directory.

-

Execute the following commands for partitioning:

fdisk <$DRIVE> d # Delete all partitions n # Create a new partition p # Choose primary partition 1 # Partition number 1 16384 # Starting sector t # Change partition type 83 # Select Linux filesystem (ext4) w # Save and exit -

Write the boot file to the SD card boot disk:

dd if=imx-boot_4G.bin of=<$DRIVE> bs=1K seek=32 conv=fsync -

Format the first partition of the SD card boot disk as ext4:

mkfs.ext4 <$DRIVE>1 -

Remove the SD card reader and reconnect. Extract the root file system rootfs.tar to the first partition of the SD card. The rootfs.tar can be made by referring to qemu-aarch64 or using the image below.

tar -xvf rootfs.tar -C <path/to/mounted/SD/card/partition>

rootfs.tar download address:

https://disk.pku.edu.cn/link/AADFFFE8F568DE4E73BE24F5AED54B00EB

Filename: rootfs.tar

- After completion, eject the SD card.

4. Compile hvisor

- Organize the configuration files

Place the configuration files where they belong. Sample configuration files can be referred to here.

- Compile hvisor

Enter the hvisor directory, switch to the main branch or dev branch, and execute the compilation command:

make ARCH=aarch64 FEATURES=platform_imx8mp,gicv3 LOG=info all

# Put the compiled hvisor image into tftp

make cp

5. Boot hvisor and root linux

Before booting the NXP board, transfer the files from the tftp directory to the SD card, such as to the /home/arm64 directory on the SD card. The files in the tftp directory include:

- Image: root linux image, can also be used as non-root linux image

- linux1.dtb, linux2.dtb: device trees for root linux and non-root linux

- hvisor.bin: hvisor image

- OK8MP-C.dtb: This is used for some checks during uboot boot, essentially useless, can be obtained from here OK8MP-C.dts

Boot the NXP board:

- Adjust the dip switches to enable SD card boot mode: (1,2,3,4) = (ON,ON,OFF,OFF).

- Insert the SD card into the SD slot.

- Connect the development board to the host using a serial cable.

- Open the serial port with terminal software

After booting the NXP board, there should be output on the serial port. Restart the development board, immediately press and hold the spacebar to make uboot enter the command line terminal, and execute the following command:

setenv loadaddr 0x40400000; setenv fdt_addr 0x40000000; setenv zone0_kernel_addr 0xa0400000; setenv zone0_fdt_addr 0xa0000000; ext4load mmc 1:1 ${loadaddr} /home/arm64/hvisor.bin; ext4load mmc 1:1 ${fdt_addr} /home/arm64/OK8MP-C.dtb; ext4load mmc 1:1 ${zone0_kernel_addr} /home/arm64/Image; ext4load mmc 1:1 ${zone0_fdt_addr} /home/arm64/linux1.dtb; bootm ${loadaddr} - ${fdt_addr};

After execution, hvisor should boot and automatically enter root linux.

6. Boot non-root linux

Booting non-root linux requires hvisor-tool. For details, please refer to the README of hvisor-tool.

Appendix. Convenient image transfer using tftp

Tftp facilitates data transfer between the development board and the host without the need to plug and unplug the SD card each time. The specific steps are as follows:

For Ubuntu systems

If you are using Ubuntu, execute the following steps in sequence:

-

Install TFTP server software package

sudo apt-get update sudo apt-get install tftpd-hpa tftp-hpa -

Configure TFTP server

Create TFTP root directory and set permissions:

mkdir -p ~/tftp sudo chown -R $USER:$USER ~/tftp sudo chmod -R 755 ~/tftpEdit the tftpd-hpa configuration file:

sudo nano /etc/default/tftpd-hpaModify as follows:

# /etc/default/tftpd-hpa TFTP_USERNAME="tftp" TFTP_DIRECTORY="/home/<your-username>/tftp" TFTP_ADDRESS=":69" TFTP_OPTIONS="-l -c -s"Replace

<your-username>with your actual username. -

Start/restart TFTP service

sudo systemctl restart tftpd-hpa -

Verify TFTP server

echo "TFTP Server Test" > ~/tftp/testfile.txttftp localhost tftp> get testfile.txt tftp> quit cat testfile.txtIf "TFTP Server Test" is displayed, the TFTP server is working properly.

-

Configure to start on boot:

sudo systemctl enable tftpd-hpa -

Connect the development board's network port (there are two, please choose the one below) to the host using a network cable. And configure the host's wired network card, ip: 192.169.137.2, netmask: 255.255.255.0.

After booting the development board, enter the uboot command line, and the command becomes:

setenv serverip 192.169.137.2; setenv ipaddr 192.169.137.3; setenv loadaddr 0x40400000; setenv fdt_addr 0x40000000; setenv zone0_kernel_addr 0xa0400000; setenv zone0_fdt_addr 0xa0000000; tftp ${loadaddr} ${serverip}:hvisor.bin; tftp ${fdt_addr} ${serverip}:OK8MP-C.dtb; tftp ${zone0_kernel_addr} ${serverip}:Image; tftp ${zone0_fdt_addr} ${serverip}:linux1.dtb; bootm ${loadaddr} - ${fdt_addr};

Explanation:

setenv serverip 192.169.137.2: Set the IP address of the tftp server.setenv ipaddr 192.169.137.3: Set the IP address of the development board.setenv loadaddr 0x40400000: Set the load address for the hvisor image.setenv fdt_addr 0x40000000: Set the load address for the device tree file.setenv zone0_kernel_addr 0xa0400000: Set the load address for the guest Linux image.setenv zone0_fdt_addr 0xa0000000: Set the load address for the root Linux device tree file.tftp ${loadaddr} ${serverip}:hvisor.bin: Download the hvisor image from the tftp server to the hvisor load address.tftp ${fdt_addr} ${serverip}:OK8MP-C.dtb: Download the device tree file from the tftp server to the device tree file load address.tftp ${zone0_kernel_addr} ${serverip}:Image: Download the guest Linux image from the tftp server to the guest Linux image load address.tftp ${zone0_fdt_addr} ${serverip}:linux1.dtb: Download the root Linux device tree file from the tftp server to the root Linux device tree file load address.bootm ${loadaddr} - ${fdt_addr}: Boot hvisor, load the hvisor image and device tree file.

For Windows systems

You can refer to this article: https://blog.csdn.net/qq_52192220/article/details/142693036

FPGA zcu102

Author: 杨竣轶 (Jerry) github.com/comet959

# Before, Install vivado 2022.2 software

# Ubuntu 20.04 can work fine

sudo apt update

git clone https://github.com/U-interrupt/uintr-rocket-chip.git

cd uintr-rocket-chip

git submodule update --init --recursive

export RISCV=/opt/riscv64

git checkout 98e9e41

vim digilent-vivado-script/config.ini # Env Config

make checkout

make clean

make build

# Use vivado to open the vivado project, then change the top file, run synthesis, run implementation, generate bitstream.

# Connect the zcu102 - Jtag and Uart on your PC.

# Use dd command to flash the image include boot and rootfs part.

# Change the boot button mode to (On Off Off Off)

# Boot the power.

sudo screen /dev/ttyUSB0 115200 # Aarch64 Core Uart

sudo screen /dev/ttyUSB2 115200 # Riscv Core Uart

# On /dev/ttyUSB0

cd uintr-rocket-chip

./load-and-reset.sh

# Focus on ttyUSB2, then you will see the Riscv Linux Boot Msg.

Enable H extension in RocketChip

vim path/to/repo/common/src/main/scala/Configs.scala

// change

class UintrConfig extends Config(

new WithNBigCores(4) ++

new WithNExtTopInterrupts(6) ++

new WithTimebase((BigInt(10000000))) ++ // 10 MHz

new WithDTS("freechips.rocketchip-unknown", Nil) ++

new WithUIPI ++

new WithCustomBootROM(0x10000, "../common/boot/bootrom/bootrom.img") ++

new WithDefaultMemPort ++

new WithDefaultMMIOPort ++

new WithDefaultSlavePort ++

new WithoutTLMonitors ++

new WithCoherentBusTopology ++

new BaseSubsystemConfig

)

// to

class UintrConfig extends Config(

new WithHypervisor ++

new WithNBigCores(4) ++

new WithNExtTopInterrupts(6) ++

new WithTimebase((BigInt(10000000))) ++ // 10 MHz

new WithDTS("freechips.rocketchip-unknown", Nil) ++

new WithUIPI ++

new WithCustomBootROM(0x10000, "../common/boot/bootrom/bootrom.img") ++

new WithDefaultMemPort ++

new WithDefaultMMIOPort ++

new WithDefaultSlavePort ++

new WithoutTLMonitors ++

new WithCoherentBusTopology ++

new BaseSubsystemConfig

)

Booting hvisor on Loongson 3A5000 motherboard (7A2000)

Han Yulu wheatfox17@icloud.com

Updated: 2025.3.24

Step 1: Obtain hvisor source code and compile

First, you need to install the loongarch64-unknown-linux-gnu- toolchain, please download and extract it from https://github.com/sunhaiyong1978/CLFS-for-LoongArch/releases/download/8.0/loongarch64-clfs-8.0-cross-tools-gcc-full.tar.xz, then add the cross-tools/bin directory to your PATH environment variable to ensure tools like loongarch64-unknown-linux-gnu-gcc can be directly called by the shell.

Then clone the code locally:

git clone -b dev https://github.com/syswonder/hvisor

make BID=loongarch64/ls3a5000

After compiling, you can find the stripped hvisor.bin file in the target directory.

Step 2 (without compiling buildroot/linux, etc.): Obtain rootfs/kernel image

Please download the latest released hvisor default Loongson Linux image from https://github.com/enkerewpo/linux-hvisor-loongarch64/releases (including root linux kernel+root linux dtb+root linux rootfs, where root linux rootfs includes non-root linux+nonroot linux dtb+nonroot linux rootfs). The rootfs is already packaged with the non-root startup json as well as hvisor-tool, kernel modules, etc.

Step 2 (compiling buildroot/linux, etc. yourself): Fully compile rootfs/kernel image

If you need to compile it yourself, this process will be more complex, and the details are as follows:

1. Prepare the environment

Create a working directory (optional):

mkdir workspace && cd workspace

git clone -b dev https://github.com/syswonder/hvisor

git clone https://github.com/enkerewpo/buildroot-loongarch64

git clone https://github.com/enkerewpo/linux-hvisor-loongarch64 hvisor-la64-linux

git clone https://github.com/enkerewpo/hvisor-tool

git clone https://github.com/enkerewpo/hvisor_uefi_packer

2. Prepare the buildroot environment

Since buildroot will download source code packages from various places when it cannot find the package to compile, I have prepared a pre-downloaded image:

https://pan.baidu.com/s/1sVPRt0JiExUxFm2QiCL_nA?pwd=la64

After downloading, place the dl directory in the root directory of buildroot-loongarch64, or you can let buildroot download it automatically (which may be very slow). If you still need to download packages after extracting the dl directory, that is normal.

3. Compile buildroot

cd buildroot-loongarch64

make loongson3a5000_hvisor_defconfig

make menuconfig # Please set Toolchain/Toolchain path prefix to your local loongarch64 toolchain path and prefix

# Then select save in the bottom right corner to save to the .config file

make -j$(nproc)

Please note

This process may take several hours, depending on your machine performance and network environment.

4. Compile linux for the first time (to prepare for subsequent make world)

cd hvisor-la64-linux # Currently using linux 6.13.7 by default

./build def # Generate the default root linux defconfig

# ./build nonroot_def # Generate the default nonroot linux defconfig

# ./build menuconfig # If you want to customize the kernel configuration, you can use this command

# (It will modify the .config file in the current directory, please be aware of whether you are modifying the root linux or nonroot linux configuration,

# You can check the content of the .flag file in the root directory is ROOT or NONROOT)

./build kernel # Compile the kernel corresponding to the current .config (may be root linux

# or nonroot linux, depending on ./build def and ./build nonroot_def)

Please note

This process may take several tens of minutes, depending on your machine performance.

5. Execute the make world process through hvisor uefi packer

First, you need to modify the Makefile.1 file in the hvisor_uefi_packer directory, changing variables like HVISOR_LA64_LINUX_DIR to the actual paths:

HVISOR_LA64_LINUX_DIR = ../hvisor-la64-linux

BUILDROOT_DIR = ../buildroot-loongarch64

HVISOR_TOOL_DIR = ../hvisor-tool

Then run:

cd hvisor_uefi_packer

./make_world

A brief introduction to the make_world script process, for specific commands please refer to the Makefile.1 file:

- Compile hvisor-tool, since the kernel module of hvisor-tool needs to be consistent with the kernel version of root linux, so you need to manually compile root linux once first, then make world can successfully compile hvisor-tool.

- Copy the related files of hvisor-tool to the rootfs overlay of buildroot, located in

$(BUILDROOT_DIR)/board/loongson/ls3a5000/rootfs_ramdisk_overlay. - Compile nonroot linux (nonroot currently does not use buildroot, but a simple busybox rootfs), note that the generated

vmlinuxincludes nonroot dtb and busybox rootfs(initramfs) (all embedded in the kernel), and movevmlinux.binto the rootfs overlay of buildroot. Remember the entry address of this nonroot linuxvmlinux, later you can modify thelinux2.jsonfile in the buildroot overlay, writing this entry address in. - Compile buildroot rootfs, this time the rootfs includes the previously compiled nonroot linux vmlinux, as well as the related files of hvisor-tool.

- Compile root linux, the generated

vmlinuxincludes root linux dtb and buildroot rootfs (initramfs), please record this root linuxvmlinuxentry address and file path, which will be used later in hvisor and hvisor uefi packer. - Finish, what we ultimately need is this root linux

vmlinux.bin.

Step 3: Compile UEFI image

Since the 3A5000 and later 3 series CPUs' motherboards use UEFI boot, hvisor can only be booted through the efi image method.

Continuing from the previous step, in the hvisor uefi packer directory, first modify the ./make_image script's HVISOR_SRC_DIR to the actual path where you saved the hvisor source code, then run the compilation script:

make menuconfig # Configure for your local loongarch64 gcc toolchain prefix, hvisor.bin path, vmlinux.bin path

# 1. Modify make_image's HVISOR_SRC_DIR=../hvisor to your actual saved hvisor source code path, then run the script

# 2. Modify BOARD=ls3a5000/ls3a6000 (choose according to your actual board model), the BOARD in the env mentioned later is the same

# ./make_world # See the previous step's description, this step can be skipped if you do not need to recompile buildroot/linux

ARCH=loongarch64 BOARD=ls3a5000 ./make_image

# make_image only compiles hvisor and BOOTLOONGARCH64.EFI

At this point, BOOTLOONGARCH64.EFI will be generated in the hvisor_uefi_packer directory, place it in the /EFI/BOOT/BOOTLOONGARCH64.EFI location of the first FAT32 partition of the USB drive.

Please note

When you compile root and nonroot linux yourself, please manually use readelf to obtain the entry addresses of the two vmlinux files, and write them correspondingly in board.rs and linux2.json, otherwise it will definitely fail to boot.

Step 4: Boot on board

Power on the motherboard, press F12 to enter the UEFI Boot Menu, select to boot from the USB drive, and you will enter hvisor, then automatically enter root linux.

Start nonroot

If you are using the related images provided in the release, after booting, enter in the bash of root linux:

./daemon.sh

./start.sh # Start nonroot, then please manually run screen /dev/pts/0

./start.sh -s # Start nonroot and automatically enter screen

Afterward, nonroot will automatically start (some related configuration files are located in the /tool directory of root linux, including the nonroot zone configuration json provided to hvisor-tool and the virtio configuration json files), then a screen process connected to the nonroot linux virtio-console will automatically open, you will see a bash printed with the nonroot label appear, you can use the CTRL+A D shortcut key to detach during screen (please remember the displayed screen session name / ID), at this time you will return to root linux, if you wish to return to nonroot linux, run

screen -r {the full name of the session just now or just enter the ID at the front}

Afterward, you will return to the bash of nonroot linux.

This catalog is mainly related to ZCU102, and the introduction is as follows:

- How to use Qemu to simulate Xilinx ZynqMP ZCU102

- How to boot hvisor root linux and nonroot linux on Qemu ZCU102 and ZCU102 physical development board.

Qemu ZCU102 hvisor Startup

Ren Hangqi (2572131118@qq.com)

Install Petalinux

- Install Petalinux 2024.1 Please note that this article uses 2024.1 as an example, which does not mean that other versions cannot be used, but other versions have not been verified, and it has been found in tests that Petalinux has a strong dependency on the operating system. Please install the version of Petalinux suitable for your operating system.

- Place the downloaded

petalinux.runfile in the directory where you want to install it, add execution permissions to it, and then run the installer directly with./petalinux.run. - The installer will automatically detect the required environment, and if it does not meet the requirements, it will prompt for the missing environment, which can be installed one by one with

apt install. - After installation, you need to enter the installation directory and manually

source settings.shto add environment variables before using Petalinux each time. If it's too troublesome, you can add this command to~/.bashrc.

Install ZCU102 BSP

- Download the BSP corresponding to the Petalinux version, in the example it is ZCU102 BSP 2024.1

- Activate the Petalinux environment, i.e., in the Petalinux installation directory

source settings.sh. - Create a Petalinux Project based on BSP:

petalinux-create -t project -s xilinx-zcu102-v2024.1-05230256.bsp - This will create a

xilinx-zcu102-2024.1folder, which contains the parameters needed for QEMU to simulate ZCU102 (device tree), as well as pre-compiled Linux images, device trees, Uboot, etc., that can be directly loaded onto the board.

Compile Hvisor

Refer to "Running Hvisor on Qemu" for configuring the environment required to compile Hvisor, then in the hvisor directory, execute:

make ARCH=aarch64 LOG=info BOARD=zcu102 cp

to compile. The directory /target/aarch64-unknown-none (may vary)/debug/hvisor contains the required hvisor image.

Prepare Device Tree

Use Existing Device Tree

In the Hvisor's image/devicetree directory, there is zcu102-root-aarch64.dts, which is a device tree file that has been tested for booting RootLinux. Compile it as follows:

dtc -I dts -O dtb -o zcu102-root-aarch64.dtb zcu102-root-aarch64.dts

If the dtc command is invalid, install the device-tree-compiler.

sudo apt-get install device-tree-compiler

Prepare Device Tree Yourself

If you have custom requirements for the device, it is recommended to prepare the device tree yourself. You can decompile the pre-built/linux/images/system.dtb in the ZCU102 BSP to get a complete device tree, and modify based on zcu102-root-aarch64.dts.

Prepare Image

Use Existing Image

It is recommended to use the pre-built/linux/images/Image from the ZCU102 BSP as the Linux kernel to boot on ZCU102, as its driver configuration is complete.

Compile Yourself

After testing, the support for ZYNQMP in the Linux source code before version 5.15 is not comprehensive, it is not recommended to use versions before this for compilation. For later versions, you can compile directly following the general compilation process, as the basic support for ZYNQMP in the source code is enabled by default. Specific compilation operations are as follows:

- Visit the linux-xlnx official website to download the Linux source code, it is best to download

zynqmp-soc-for-v6.3. tar -xvf zynqmp-soc-for-v6.3to unzip the source code- Enter the unzipped directory, execute the following command using the default configuration,

make ARCH=arm64 CROSS_COMPILE=aarch64-linux-gnu- defconfig - Compile:

make ARCH=arm64 CROSS_COMPILE=aarch64-linux-gnu- Image -j$(nproc) - After compilation, the directory

arch/arm64/boot/Imagecontains the required image.

Enable QEMU Simulation

- Activate the Petalinux environment, i.e., in the Petalinux installation directory

source settings.sh. - Enter the

xilinx-zcu102-2024.1folder, use the following command to start hvisor on the QEMU-simulated ZCU102, where the file paths need to be modified according to your actual situation.

# QEMU parameter passing

petalinux-boot --qemu --prebuilt 2 --qemu-args '-device loader,file=hvisor,addr=0x40400000,force-raw=on -device loader,

file=zcu102-root-aarch64.dtb,addr=0x40000000,force-raw=on -device loader,file=zcu102-root-aarch64.dtb,addr=0x04000000,

force-raw=on -device loader,file=/home/hangqi-ren/Image,addr=0x00200000,force-raw=on -drive if=sd,format=raw,index=1,

file=rootfs.ext4'

# Start hvisor

bootm 0x40400000 - 0x40000000

ZCU102 Board hvisor Multi-Mode Boot

Ren Hangqi (2572131118@qq.com)

Booting Hvisor on ZCU102 Development Board in SD Mode

Prepare the SD Card

- Prepare a standard SD card, partition it into one Boot partition (FAT32) and the rest as file system partitions (EXT4). Windows partitions can be managed using DiskGenius, and Linux partitions can be managed using fdisk, mkfs.

- Prepare a file system and copy its contents to any file system partition, refer to "NXPIMX8" for creating an Ubuntu file system, or directly use the file system in the ZCU102 BSP.

- Copy

zcu102-root-aarch64.dtb,Image,hvisorto the Boot partition. - In SD mode, ATF and Uboot need to be provided from the SD card, thus copy

boot.scrandBOOT.BINfrom the ZCU102 BSP into the BOOT partition.

Booting ZCU102

- Set ZCU102 to SD mode, insert the SD card, connect the serial port, and power on.

- Press any key to interrupt the Uboot auto script execution, run the following commands to start hvisor and root Linux:

fatload mmc 0:1 0x40400000 hvisor;fatload mmc 0:1 0x40000000 zcu102-root-aarch64.dtb

fatload mmc 0:1 0x04000000 zcu102-root-aarch64.dtb;fatload mmc 0:1 0x00200000 Image;bootm 0x40400000 - 0x40000000

- If successful, hvisor and Linux information will be visible on the serial port, ultimately entering the file system.

Booting Hvisor on ZCU102 Development Board in Jtag Mode

First, connect the two cables that come with the board to the JTAG and UART interfaces on the board, and the other end to the PC via USB.

Then, open a petalinux project in the command line, ensure the project has been compiled and generated the corresponding boot files (vmlinux, BOOT.BIN, etc.), then enter the project root directory and run:

petalinux-boot --jtag --prebuilt 2

Where prebuilt represents the boot level:

- Level 1: Only download the FPGA bitstream, boot FSBL and PMUFW

- Level 2: Download the FPGA bitstream and boot UBOOT, and start FSBL, PMUFW, and TF-A (Trusted Firmware-A)

- Level 3: Download and boot Linux, and load or boot FPGA bitstream, FSBL, PMUFW, TF-A, UBOOT

Then, JTAG will download the corresponding files to the board (saving to specified memory addresses) and start the corresponding bootloader. For the official UBOOT default script, refer to the boot.scr file in the project image directory.

Since hvisor requires separate UBOOT commands and a custom-made fitImage to boot, please refer to UBOOT FIT Image Creation, Loading, and Booting.

After creating the fitImage, replace the file (Image.ub) in the petalinux images generation directory so that JTAG loads our custom-made fitImage to the default FIT image loading address configured in the petalinux project. This way, JTAG will load our fitImage through the JTAG line to the corresponding address in the board memory, then extract and bootm through the uboot command line.

Another UART line can be used to observe the output of the ZCU102 board (including FSBL, UBOOT, Linux, etc.), which can be viewed through serial port tools like screen, gtkterm, termius, minicom.

Please Note

Since petalinux has specified some fixed memory addresses, such as the default loading addresses for the Linux kernel, fitImage, DTB (configurable during petalinux compilation), since we need to load and boot a custom-made fitImage, a problem discovered is that if the root Linux dtb's loading address in its matches the loading address during petalinux compilation, it will cause the dtb to be overwritten by the default petalinux dtb, thereby causing root Linux to receive an incorrect dtb and fail to boot. Therefore, it is necessary to specify a different address from the default petalinux dtb/fitImage loading address during compilation to prevent other issues.

References

[1] PetaLinux Tools Documentation: Reference Guide (UG1144).https://docs.amd.com/r/2023.1-English/ug1144-petalinux-tools-reference-guide/Booting-a-PetaLinux-Image-on-Hardware-with-JTAG [2] Trusted Firmware-A Documentation.https://trustedfirmware-a.readthedocs.io/en/latest/

ZCU102 NonRoot Boot

Ren Hangqi (2572131118@qq.com)

- Use the Linux kernel source code used during the Root boot to compile hvisor-tool, the detailed compilation process can refer to Readme.

- Prepare the

virtio_cfg.jsonandzone1_linux.jsonneeded to boot NonRoot, you can directly use theexample/zcu102-aarch64under the hvisor-tool directory, the content has been verified to ensure it can boot. - Prepare the Linux kernel Image, filesystem rootfs, and device tree linux1.dtb required for NonRoot. The kernel and filesystem can be the same as Root, Linux1.dtb is configured as needed, you can also use

images/aarch64/devicetree/zcu102-nonroot-aarch64.dtsunder the hvisor directory. - Copy

hvisor.ko, hvisor, virtio_cfg, zone1_linux.json, linux1.dtb, Image, rootfs.ext4to the filesystem used by Root Linux. - Enter the following commands in RootLinux to start NonRoot:

# Load the kernel module

insmod hvisor.ko

# Create virtio device

nohup ./hvisor virtio start virtio_cfg.json &

# Start NonRoot according to the json configuration file

./hvisor zone start zone1_linux.json

# View the output of NonRoot and interact.

screen /dev/pts/0

For more operational details refer to hvisor-tool Readme

UBOOT FIT Image Creation, Loading, and Booting

wheatfox (wheatfox17@icloud.com)

This article introduces the basic knowledge related to FIT images, as well as how to create, load, and boot FIT images.

ITS Source File

ITS is the source code used by uboot to generate FIT images (FIT Image), which is Image Tree Source, using Device Tree Source (DTS) syntax format. FIT images can be generated using the mkimage tool provided by uboot.

In the ZCU102 port of hvisor, a FIT image is used to package hvisor, root linux, root dtb, etc. into one fitImage, which facilitates booting on QEMU and actual hardware.

The ITS file for the ZCU102 platform is located at scripts/zcu102-aarch64-fit.its:

/dts-v1/;

/ {

description = "FIT image for HVISOR with Linux kernel, root filesystem, and DTB";

images {

root_linux {

description = "Linux kernel";

data = /incbin/("__ROOT_LINUX_IMAGE__");

type = "kernel";

arch = "arm64";

os = "linux";

...

};

...

root_dtb {

description = "Device Tree Blob";

data = /incbin/("__ROOT_LINUX_DTB__");

type = "flat_dt";

...

};

hvisor {

description = "Hypervisor";

data = /incbin/("__HVISOR_TMP_PATH__");

type = "kernel";

arch = "arm64";

...

};

};

configurations {

default = "config@1";

config@1 {

description = "default";

kernel = "hvisor";

fdt = "root_dtb";

};

};

};

Here, __ROOT_LINUX_IMAGE__, __ROOT_LINUX_DTB__, __HVISOR_TMP_PATH__ will be replaced with actual paths by the sed command in the Makefile. In the ITS source code, it is mainly divided into images and configurations sections. The images section defines the files to be packaged, and the configurations section defines how to combine these files. At UBOOT boot time, the files specified in the configurations will be automatically loaded to the designated address according to the default configuration, and multiple configurations can be set to support loading different configurations of images at boot time.

Makefile mkimage corresponding command:

.PHONY: gen-fit

gen-fit: $(hvisor_bin) dtb

@if [ ! -f scripts/zcu102-aarch64-fit.its ]; then \

echo "Error: ITS file scripts/zcu102-aarch64-fit.its not found."; \

exit 1; \

fi

$(OBJCOPY) $(hvisor_elf) --strip-all -O binary $(HVISOR_TMP_PATH)

# now we need to create the vmlinux.bin

$(GCC_OBJCOPY) $(ROOT_LINUX_IMAGE) --strip-all -O binary $(ROOT_LINUX_IMAGE_BIN)

@sed \

-e "s|__ROOT_LINUX_IMAGE__|$(ROOT_LINUX_IMAGE_BIN)|g" \

-e "s|__ROOT_LINUX_ROOTFS__|$(ROOT_LINUX_ROOTFS)|g" \

-e "s|__ROOT_LINUX_DTB__|$(ROOT_LINUX_DTB)|g" \

-e "s|__HVISOR_TMP_PATH__|$(HVISOR_TMP_PATH)|g" \

scripts/zcu102-aarch64-fit.its > temp-fit.its

@mkimage -f temp-fit.its $(TARGET_FIT_IMAGE)

@echo "Generated FIT image: $(TARGET_FIT_IMAGE)"

Booting hvisor and root linux through FIT image in petalinux qemu

Since a fitImage includes all the necessary files, for qemu, it only needs to load this file into an appropriate position in memory through the loader.

Then, when qemu starts and enters UBOOT, you can use the following command to boot (please modify the specific address according to the actual situation, when actually using, you can write all lines into one line and copy it to UBOOT for booting, or save it to the environment variable bootcmd, which requires UBOOT to mount a persistent flash for environment variable storage):

setenv fit_addr 0x10000000; setenv root_linux_load 0x200000;

imxtract ${fit_addr} root_linux ${root_linux_load}; bootm ${fit_addr};

References

[1] Flat Image Tree (FIT). https://docs.u-boot.org/en/stable/usage/fit/

Ren Hangqi (2572131118@qq.com)

Update Time: 2025.4.23

Operations needed for the new board

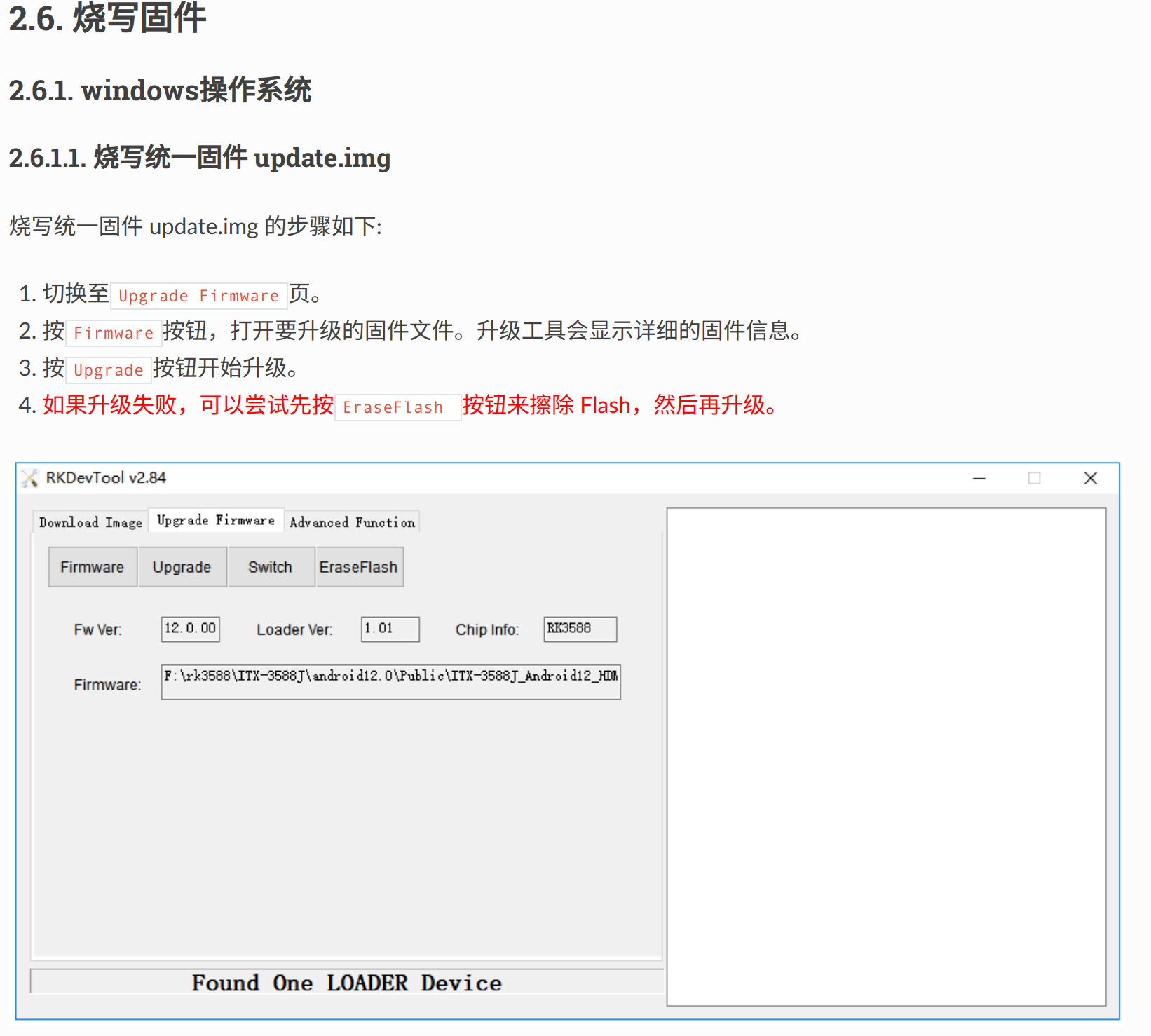

If the board is a newly acquired RK3588, it may not have anything written inside, including the bootloader, so you first need to preliminarily write to the board, writing Uboot, Linux, Rootfs, etc. It is recommended to write using RKDevTool on Windows.

You can directly download the update.img from this link. This image packages all the above content. Directly writing this image can achieve everything in one step, the specific operations are as follows:

- Connect the board to the computer via USB using the Type-C port.

- First, put the board in MaskRoom or Loader mode (can be recognized in RKDevTool)

- Loader mode: While powered on, press RST and BOOT buttons simultaneously, or enter

reboot loaderin Uboot, Linux. - MaskRoom mode: New boards default to this mode.

- Loader mode: While powered on, press RST and BOOT buttons simultaneously, or enter

- Operate in RKDevTool as shown below

Obtain RK3588 Kernel Image

RK3588 has a dedicated kernel image, the source code can be obtained through this link.

For ease and quick start, this kernel is already compiled, so the file is large. You can directly obtain the image through kernel/arch/arm64/boot/Image or use the existing .config to customize the required image.

Of course, if you are not concerned about the kernel source code, you can directly obtain the Image through this link.

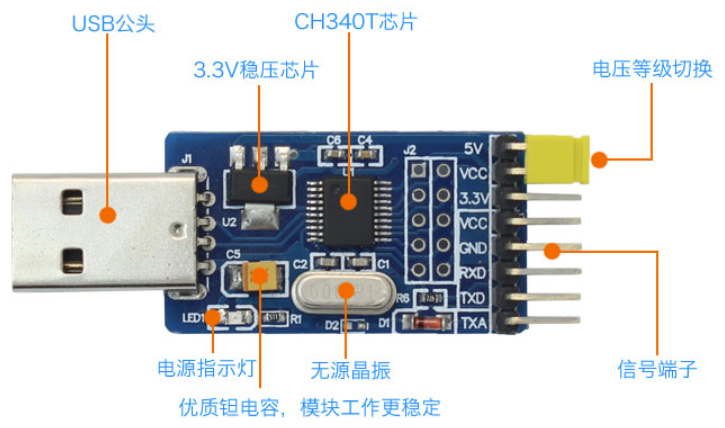

Serial Port Connection

RK3588's serial port is quite special, you need to purchase a USB to TTL converter for connection.

Connect RT-TX, TX-RD, GND-GND when connecting, and the baud rate of the serial port is 1500000 after connecting to the computer.

Re-write Uboot

To start hvisor, the original Uboot has no waiting time and will directly start Linux. Uboot needs to be rewritten, and a copy of Uboot and its writing tool is already prepared, which can be obtained through Uboot, Upgrade-tool.

Under the Linux system, also connect the board and the computer with USB and put it in Loader mode:

chmod +777 /path/to/upgrade_tool

sudo upgrade_tool di -u /path/to/uboot.img

Compile Hvisor and Device Tree

- Similar to other development boards, pull the latest code of hvisor from the repository: https://github.com/syswonder/hvisor. Enter the directory and compile hvisor:

make BID=aarch64/rk3588 - Enter

/images/aarch64/devicetree/,rk3588-root-aarch64-sdmmc.dtsis the device tree used by RK3588 RootLinux,rk3588-nonroot-aarch64.dtsis the device tree used by RK3588 NonRootLinux. You can compile them using the following commands:dtc -I dts -O dtb rk3588-nonroot-aarch64.dts -o ./linux1.dtb dtc -I dts -O dtb rk3588-root-aarch64-sdmmc.dts -o ./zone0.dtb

Create File System

Prepare an SD card, partition it into two, the first one in FAT32 format, size 1GB; the second one in EXT4 format.

Download the file system, unzip this file system to the EXT4 formatted partition.

Of course, you can also make it yourself, refer to Building a file system based on ubuntu_base.

Start RootLinux

TFTP

If a TFTP server is already set up, then you can start RootLinux in a convenient way, specifically:

- Copy Image, zone0.dtb, hvisor.bin to the ~/tftp folder

- Connect the host and the development board with an Ethernet cable, configure the host IP to

192.168.137.2, subnet mask255.255.255.0. - Simply power on and connect the serial port, uboot will automatically download the content from the tftp folder and start.

If you need to set up, you can refer to Rapid Development on Embedded Platforms - Tftp Server Setup and Configuration.

Without TFTP

At this time, the first FAT32 partition on the SD card comes into play, copy Image, zone0.dtb, hvisor.bin to this partition

- Start the development board, connect the serial port.

- Interrupt Uboot's automatic start.

- Enter the following commands to start RootLinux

fatload mmc 0:1 0x00480000 hvisor.bin;fatload mmc 0:1 0x10000000 zone0.dtb;fatload mmc 0:1 0x09400000 Image;bootm 0x00480000 - 0x10000000

Start NonRootLinux

Download Ready-Made Configuration Files

Through this link you can download usable NonRoot configurations, including Image, configuration files, rootfs, etc., unzip them to the RootLinux file system, which can help quickly start Nonroot, mainly starting devices like virtio-blk and virtio-console, or you can pass through devices according to your own needs.

Tips:

Please do not arbitrarily change the Image used for Rootlinux, as this will cause Nonroot to fail to start!

Compile hvisor-tool

Pull the latest code of Hvisor-tool: https://github.com/syswonder/hvisor-tool/tree/main, and compile:

make all ARCH=aarch64 LOG=LOG_WARN KDIR=RK3588 kernel source VIRTIO_GPU=n

For more details, please refer to the Readme.md of hvisor-tool.

Note, the kernel source must have been compiled, otherwise hvisor-tool will report an error because it cannot find the compiled product.

Tips:

The glibc version used when compiling hvisor-tool needs to ensure that the Rootlinux file system also supports it, otherwise hvisor-tool will not work properly!

For example, the Rootlinux Rootfs given in this article is Ubuntu 24.04. The Glibc version of the compiling machine should not be higher than the Glibc version of Ubuntu24.04, which is generally satisfied here since Ubuntu24.04 is newer.

Of course, the above operation is completed by aligning the glibc versions used by the two file systems, or you can directly compile hvisor-tool in the Rootlinux file system, or specify the Rootlinux file system directory on the compiling machine, so that the compilation link directly links the glibc of the Rootlinux file system, specifically as follows:

make all ARCH=aarch64 LOG=LOG_WARN KDIR=RK3588 kernel source VIRTIO_GPU=n \

ROOT=/path/to/target_rootfs

Start

Execute the following commands under Rootlinux

insmod hvisor.ko

nohup ./hvisor virtio start virtio_cfg.json &

./hvisor zone start zone1_linux.json

screen /dev/pts/0

You can then see the output of the second virtual machine (Nonroot).

Tips:

If you do not configure the Rootlinux file system according to the above process, or with version iterations, there may be situations where the ready-made configuration files are not usable, in which case you need to update the configuration yourself, and we will also follow up as soon as possible.

For the latest configuration format, you can refer to the example under hvisor-tool.

How to Compile

Compile using Docker

1. Install Docker

sudo snap install docker

You can also refer to the Docker Official Documentation to install Docker.

2. Build the Image

make build_docker

This step builds a Docker image, automatically compiling all required dependencies.

3. Run the Container

make docker

This step starts a container, mounts the current directory into the container, and enters the container's shell.

4. Compile

Execute the following command in the container to compile.

make all

Compile using the local environment

1. Install RustUp and Cargo

curl --proto '=https' --tlsv1.2 -sSf https://sh.rustup.rs | \

sh -s -- -y --no-modify-path --profile minimal --default-toolchain nightly

2. Install the Toolchain

The toolchain currently used by the project includes:

- Rust nightly 2023-07-12

- rustfmt

- clippy

- cargo-binutils

- rust-src

- llvm-tools-preview

- target: aarch64-unknown-none

You can check if these tools are installed yourself, or use the following commands to install them:

(1) Install toml-cli and cargo-binutils

cargo install toml-cli cargo-binutils

(2) Install the cross-compilation toolchain for the target platform

rustup target add aarch64-unknown-none

(3) Parse rust-toolchain.toml to install the Rust toolchain

RUST_VERSION=$(toml get -r rust-toolchain.toml toolchain.channel) && \

Components=$(toml get -r rust-toolchain.toml toolchain.components | jq -r 'join(" ")') && \

rustup install $RUST_VERSION && \

rustup component add --toolchain $RUST_VERSION $Components

(4) Compile

make all

How to Start Root Linux

QEMU

Install Dependencies

1. Install Dependencies

apt-get install -y jq wget build-essential \

libglib2.0-0 libfdt1 libpixman-1-0 zlib1g \

libfdt-dev libpixman-1-dev libglib2.0-dev \

zlib1g-dev ninja-build

1. Download and Extract QEMU

wget https://download.qemu.org/qemu-7.0.0.tar.xz

tar -xvf qemu-${QEMU_VERSION}.tar.xz

2. Conditionally Compile and Install QEMU

Here we only compile QEMU for emulating aarch64, if you need QEMU for other architectures, refer to QEMU Official Documentation.

cd qemu-7.0.0 && \

./configure --target-list=aarch64-softmmu,aarch64-linux-user && \

make -j$(nproc) && \

make install

3. Test if QEMU is Successfully Installed

qemu-system-aarch64 --version

Start Root Linux

1. Prepare Root File System and Kernel Image

Place the image file in hvisor/images/aarch64/kernel/, named Image.

Place the Root file system in hvisor/images/aarch64/virtdisk/, named rootfs1.ext4.

2. Start QEMU

Execute the following command in the hviosr directory:

make run

3. Enter QEMU

It will automatically load uboot, wait for uboot to finish loading, then enter bootm 0x40400000 - 0x40000000 to enter Root Linux.

How to Start NonRoot Linux

Hvisor has properly handled the startup of NonRoot, making it relatively simple, as follows:

-

Prepare the kernel image, device tree, and file system for NonRoot Linux. Place the kernel and device tree in the file system of Root Linux.

-

Specify the serial port used by this NonRoot Linux and the file system to be mounted in the device tree file for NonRoot Linux, as shown in the example below:

chosen {

bootargs = "clk_ignore_unused console=ttymxc3,115200 earlycon=ec_imx6q3,0x30a60000,115200 root=/dev/mmcblk3p2 rootwait rw";

stdout-path = "/soc@0/bus@30800000/serial@30a60000";

};

-

Compile the kernel module and command line tools for Hvisor and place them in the file system of Root Linux.

-

Start Hvisor's Root Linux and inject the kernel module that was just compiled:

insmod hvisor.ko

- Use the command line tool, here assumed to be named

hvisor, to start NonRoot Linux.

./hvisor zone start --kernel kernel image,addr=0x70000000 --dtb device tree file,addr=0x91000000 --id virtual machine number (starting from 1)

- After NonRoot Linux has started, open the specified serial port to use it.

Configuration and Management of Zones

The hvisor project, as a lightweight hypervisor, uses a Type-1 architecture that allows multiple virtual machines (zones) to run directly on top of hardware. Below is a detailed explanation of the key points for zone configuration and management:

Resource Allocation

Resources such as CPU, memory, devices, and interrupts are statically allocated to each zone, meaning that once allocated, these resources are not dynamically scheduled between zones.

Root Zone Configuration

The configuration of the root zone is hardcoded within hvisor, written in Rust, and represented as a C-style structure HvZoneConfig. This structure contains key information such as zone ID, number of CPUs, memory regions, interrupt information, physical addresses and sizes of the kernel and device tree binary (DTB).

Non-root Zones Configuration

The configuration of non-root zones is stored in the root Linux file system, usually represented in JSON format. For example:

{

"arch": "arm64",

"zone_id": 1,

"cpus": [2, 3],

"memory_regions": [

{

"type": "ram",

"physical_start": "0x50000000",

"virtual_start": "0x50000000",

"size": "0x30000000"

},

{

"type": "io",

"physical_start": "0x30a60000",

"virtual_start": "0x30a60000",

"size": "0x1000"

},

{

"type": "virtio",

"physical_start": "0xa003c00",

"virtual_start": "0xa003c00",

"size": "0x200"

}

],

"interrupts": [61, 75, 76, 78],

"kernel_filepath": "./Image",

"dtb_filepath": "./linux2.dtb",

"kernel_load_paddr": "0x50400000",

"dtb_load_paddr": "0x50000000",

"entry_point": "0x50400000"

}

- The

archfield specifies the target architecture (e.g., arm64). cpusis a list that indicates the CPU core IDs allocated to the zone.memory_regionsdescribe different types of memory regions and their physical and virtual start addresses and sizes.interruptslist the interrupt numbers allocated to the zone.kernel_filepathanddtb_filepathindicate the paths of the kernel and device tree binary files, respectively.kernel_load_paddranddtb_load_paddrare the physical memory load addresses for the kernel and device tree binary.entry_pointspecifies the kernel's entry point address.

The management tool of root Linux is responsible for reading the JSON configuration file and converting it into a C-style structure, which is then passed to hvisor to start the non-root zones.

Command Line Tool

The command line tool is an auxiliary management tool for hvisor, used to create and shut down other virtual machines on the managed virtual machine Root Linux, and is responsible for starting the Virtio daemon, providing Virtio device emulation. The repository is located at hvisor-tool. For specific usage, please see the README.

Using VirtIO Devices

For specific usage tutorials, please refer to: hvisor-tool-README

hvisor Overall Architecture

-

CPU Virtualization

- Architecture Compatibility: Supports architectures such as aarch64, riscv64, and loongarch, with dedicated CPU virtualization components for each architecture.

- CPU Allocation: Uses static allocation method, pre-determining the CPU resources for each virtual machine.

-

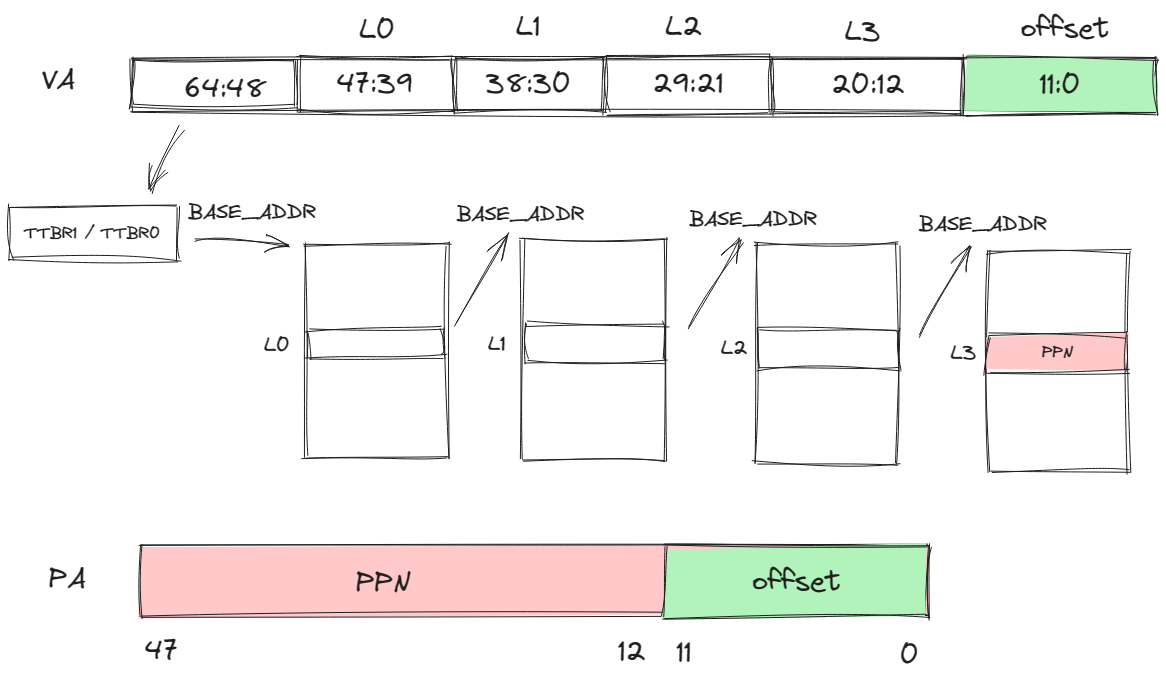

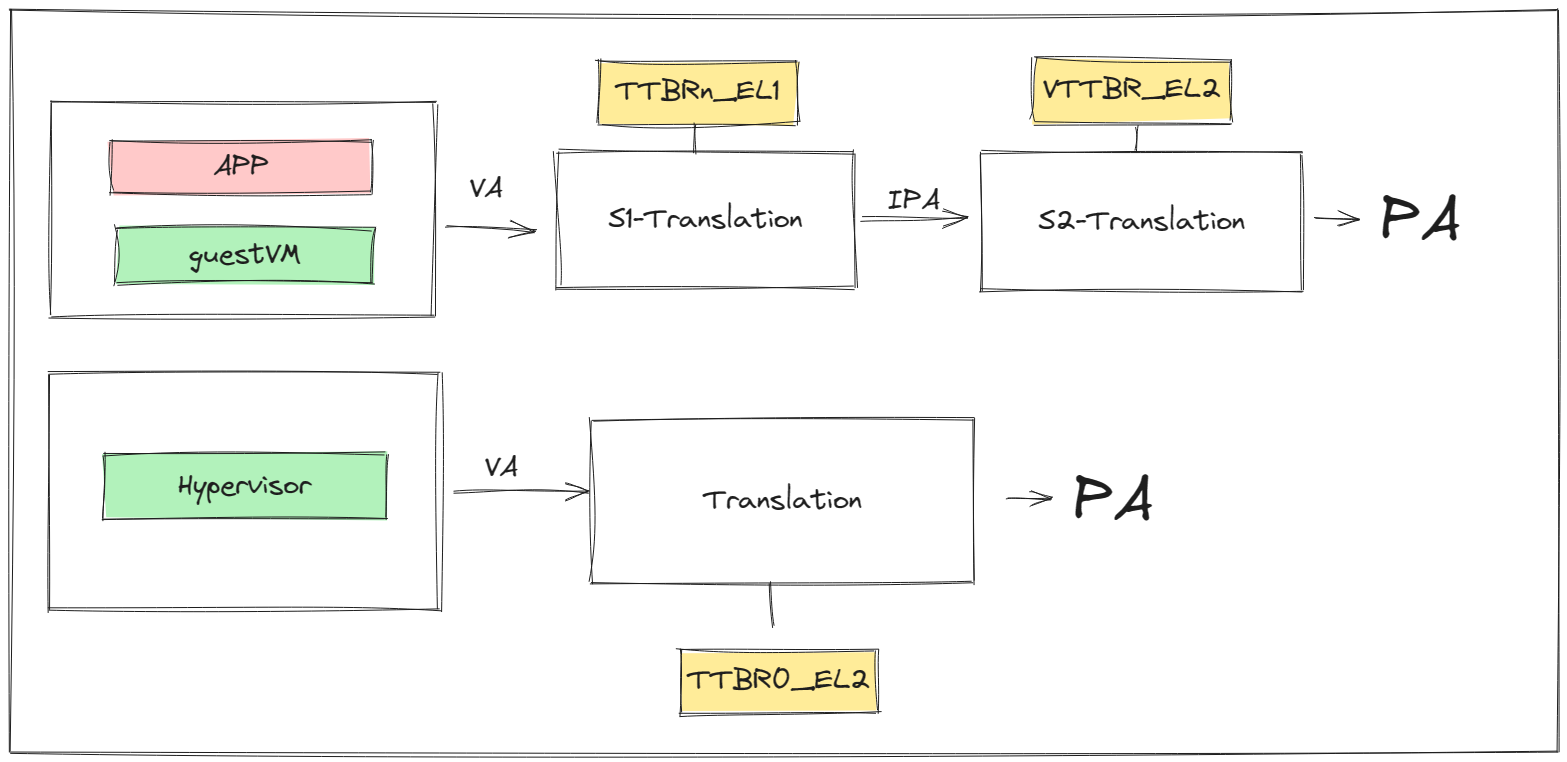

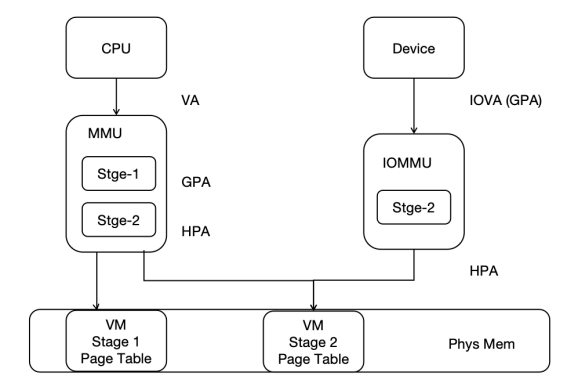

Memory Virtualization

- Two-stage Page Table: Utilizes two-stage page table technology to optimize the memory virtualization process.

-

Interrupt Virtualization

- Interrupt Controller Virtualization: Supports virtualization of different architecture's interrupt controllers like ARM GIC and RISC-V PLIC.

- Interrupt Handling: Manages the transmission and processing flow of interrupt signals.

-

I/O Virtualization

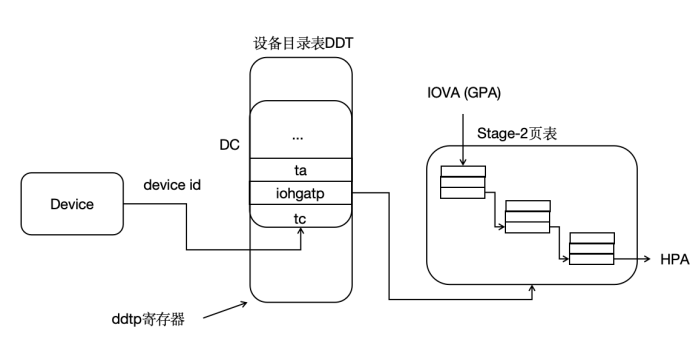

- IOMMU Integration: Supports IOMMU to enhance the efficiency and security of DMA virtualization.

- VirtIO Standard: Follows the VirtIO specification, providing high-performance virtual devices.

- PCI Virtualization: Implements PCI virtualization, ensuring virtual machines can access physical or virtual I/O devices.

Initialization Process of hvisor

Abstract: This article introduces the relevant knowledge involved in running hvisor on qemu and the initialization process of hvisor. Starting from the launch of qemu, the entire process is tracked, and after reading this article, you will have a general understanding of the initialization process of hvisor.

Boot Process of qemu

The boot process of the computer simulated by qemu: After loading the necessary files into memory, the PC register is initialized to 0x1000, and a few instructions are executed from here before jumping to 0x80000000 to start executing the bootloader (hvsior arm part uses Uboot). After executing a few instructions, it jumps to the starting address of the kernel that uboot can recognize.

Generate the executable file of hvisor

rust-objcopy --binary-architecture=aarch64 target/aarch64-unknown-none/debug/hvisor --strip-all -O binary target/aarch64-unknown-none/debug/hvisor.bin.tmp

Convert the executable file of hvisor into a logical binary and save it as hvisor.bin.tmp.

Generate an image file recognizable by uboot

Uboot is a bootloader whose main task is to jump to the first instruction of the hvisor image and start execution, so it is necessary to ensure that the generated hvisor image is recognizable by uboot. Here, the mkimage tool is needed.

mkimage -n hvisor_img -A arm64 -O linux -C none -T kernel -a 0x40400000 -e 0x40400000 -d target/aarch64-unknown-none/debug/hvisor.bin.tmp target/aarch64-unknown-none/debug/hvisor.bin

-n hvisor_img: Specify the name of the kernel image.-A arm64: Specify the architecture as ARM64.-O linux: Specify the operating system as Linux.-C none: Do not use compression algorithms.-T kernel: Specify the type as kernel.-a 0x40400000: Specify the loading address as0x40400000.-e 0x40400000: Specify the entry address as0x40400000.-d target/aarch64-unknown-none/debug/hvisor.bin.tmp: Specify the input file as the previously generated temporary binary file.- The last parameter is the output file name, i.e., the final kernel image file

hvisor.bin.

Initialization Process

aarch64.ld Link Script

To understand how hvisor is executed, we first look at the link script aarch64.ld, which gives us a general understanding of the execution process of hvisor.

ENTRY(arch_entry)

BASE_ADDRESS = 0x40400000;

The first line sets the program entry arch_entry, which can be found in arch/aarch64/entry.rs, introduced later.

.text : {

*(.text.entry)

*(.text .text.*)

}

We make the .text segment the first segment, and place the .text.entry containing the first instruction of the entry at the beginning of the .text segment, ensuring that hvisor indeed starts execution from the 0x40400000 location agreed with qemu.

Here we also need to remember something called __core_end, which is the address of the end position of the link script, and its role can be known during the startup process.

arch_entry

With the above prerequisites, we can step into the first instruction of hvisor, which is arch_entry().

// src/arch/aarch64/entry.rs

pub unsafe extern "C" fn arch_entry() -> i32 {

unsafe {

core::arch::asm!(

"

// x0 = dtbaddr

mov x1, x0

mrs x0, mpidr_el1

and x0, x0, #0xff

ldr x2, =__core_end // x2 = &__core_end

mov x3, {per_cpu_size} // x3 = per_cpu_size

madd x4, x0, x3, x3 // x4 = cpuid * per_cpu_size + per_cpu_size

add x5, x2, x4

mov sp, x5 // sp = &__core_end + (cpuid + 1) * per_cpu_size

b {rust_main} // x0 = cpuid, x1 = dtbaddr

",

options(noreturn),

per_cpu_size=const PER_CPU_SIZE,

rust_main = sym crate::rust_main,

);

}

}

First, look at the embedded assembly part. The first instruction mov x1, x0 transfers the value in the x0 register to the x1 register, where x0 contains the address of the device tree. Qemu simulates an ARM architecture computer, which also has various devices such as mice, display screens, and various storage devices. When we want to get input from the keyboard or output to the display, we need to get input from somewhere or put the output data somewhere. In the computer, we use specific addresses to access these devices. The device tree contains the access addresses of these devices, and the hypervisor, as the general manager of all software, naturally needs to know the information of the device tree. Therefore, Uboot will put this information in x0 before entering the kernel, which is a convention.

In mrs x0, mpidr_el1, mrs is an instruction to access system-level registers, which means to send the contents of the system register mpidr_el1 to x0. mpidr_el1 contains information about which CPU we are currently dealing with (the computer supports multi-core CPUs), and there will be a lot of cooperation work with the CPU later, so we need to know which CPU is currently in use. This register contains a lot of information about the CPU, and we currently need to use the lower 8 bits to extract the corresponding CPU id, which is what the instruction and x0, x0, #0xff is doing.

ldr x2, = __core_end, at the end of the link script, we set a symbol __core_end as the end address of the entire hvisor program space, and put this address into x2.

mov x3, {per_cpu_size} puts the size of each CPU's stack into x3. This {xxx} is to replace the value of xxx defined externally into the assembly code. You can see that the parameter below per_cpu_size=const PER_CPU_SIZE has changed the name of an external variable as a parameter. Another parameter with sym indicates that a symbol follows, which is defined elsewhere.

per_cpu_size in this size space, related registers can be saved and restored, including the CPU's stack space.

madd x4, x0, x3, x3 is a multiply-add instruction, cpu_id * per_cpu_size + per_cpu_size, and the result is put into x4. At this point, x4 contains the total space required by the current number of CPUs. (Starting from 0, so add per_cpu_size one more time).

add x5, x2, x4 means to add the end address of hvisor and the total space required by the CPU to x5.

mov sp, x5 finds the top of the current CPU's stack.

b {rust_main} represents jumping to rust_main to start execution, which also indicates that this section of assembly code will not return, corresponding to option(noreturn).

Enter rust_main()

fn rust_main(cpuid: usize, host_dtb: usize)

Entering rust_main requires two parameters, which are passed through x0 and x1. Remember that in the previous entry, our x0 stored the cpu_id and x1 stored the device tree information.

install_trap_vector()

When the processor encounters an exception or interrupt, it needs to jump to the corresponding location for processing. Here, the corresponding jump addresses are set (which can be considered as setting a table) for handling exceptions at the Hypervisor level. Each privilege level has its own corresponding exception vector table, except for EL0, the application privilege level, which must jump to other privilege levels to handle exceptions. The VBAR_ELn register is used to store the base address of the exception vector table for the ELn privilege level.

extern "C" {

fn _hyp_trap_vector();

}

pub fn install_trap_vector() {

// Set the trap vector.

VBAR_EL2.set(_hyp_trap_vector as _)

}

VBAR_EL2.set() sets the address of _hyp_trap_vector() as the base address of the exception vector table for the EL2 privilege level.

_hyp_trap_vector() This assembly code constructs the exception vector table.

Simple Introduction to the Exception Vector Table Format

Based on the level of the exception and whether the level of handling the exception remains the same, it is divided into two categories. If the level does not change, it is divided into two groups based on whether the current level's SP is used. If the exception level changes, it is divided into two groups based on whether the execution mode is 64-bit/32-bit. Thus, the exception vector table is divided into 4 groups. In each group, each table entry represents an entry point for handling a specific type of exception.

Main CPU

static MASTER_CPU: AtomicI32 = AtomicI32::new(-1);

let mut is_primary = false;

if MASTER_CPU.load(Ordering::Acquire) == -1 {

MASTER_CPU.store(cpuid as i32, Ordering::Release);

is_primary = true;

println!("Hello, HVISOR!");

#[cfg(target_arch = "riscv64")]

clear_bss();

}

static MASTER_CPU: AtomicI32 In this, AtomicI32 indicates that this is an atomic type, meaning its operations are either successful or fail without any intermediate state, ensuring safe access in a multi-threaded environment. In short, it is a very safe i32 type.

MASSTER_CPU.load() is a method for performing read operations. The parameter Ordering::Acquire indicates that if there are some write operations before I read, I need to wait for these write operations to be completed in order. In short, this parameter ensures that the data is correctly changed before being read.

If it reads -1, the same as when it was defined, it indicates that the main CPU has not been set, so set cpu_id as the main CPU. Similarly, the role of Ordering::Release is certainly to ensure that all other modifications are completed before the change.

Common Data Structure for CPUs: PerCpu

hvisor supports different architectures, and a reasonable system design should allow different architectures to use a unified interface for easy description of each part's work. PerCpu is such a general CPU description.

pub struct PerCpu {

pub id: usize,

pub cpu_on_entry: usize,

pub arch_cpu: ArchCpu,

pub zone: Option<Arc<RwLock<Zone>>>,

pub ctrl_lock: Mutex<()>,

pub boot_cpu: bool,

// percpu stack

}

For each field of PerCpu:

id: CPU sequence numbercpu_on_entry: The address of the first instruction when the CPU enters EL1, also known as the guest. Only when this CPU is the boot CPU will it be set to a valid value. Initially, we set it to an inaccessible address.arch_cpu: CPU description related to the architecture. The behavior is initiated byPerCpu, and the specific executor isarch_cpu.cpu_idpsci_on: Whether the cpu is started

zone: zone actually represents a guestOS. For the same guestOS, multiple CPUs may serve it.ctrl_lock: Set for concurrent safety.boot_cpu: For a guestOS, it distinguishes the main/secondary cores serving it.boot_cpuindicates whether the current CPU is the main core for a guest.

Main Core Wakes Up Other Cores

if is_primary {

wakeup_secondary_cpus(cpu.id, host_dtb);

}

fn wakeup_secondary_cpus(this_id: usize, host_dtb: usize) {

for cpu_id in 0..MAX_CPU_NUM {

if cpu_id == this_id {

continue;

}